When a problem with one or more call detail records (CDRs) is encountered during collection and processing, the offending records are written to a .dat file, which is then zipped and stored in the error directory.

The name of each zip file contains the date and time the file was created, which you can use to identify when the file was processed. Once the issue that prevented the files from being processed and stored has been resolved, the files can be unzipped and placed back into the CDR directory.
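Because each archive name starts with its creation timestamp, a short listing can show which periods have errors before you decide what to unzip. The bash function below is a sketch under assumptions: summarize_error_zips is a hypothetical name, not part of Variphy, and it assumes every archive name begins with a YYYYMM prefix, as the "2023*.zip" and "202303*.zip" patterns in the unzip step suggest.

```shell
# Hypothetical helper (not part of Variphy): count error archives per month.
# ASSUMPTION: archive names start with a YYYYMM... timestamp.
summarize_error_zips() {
  local dir="${1:-.}"
  local f
  for f in "$dir"/*.zip; do
    [ -e "$f" ] || continue        # no matches: the glob stays literal, skip it
    basename "$f" | cut -c1-6      # keep only the YYYYMM prefix
  done | sort | uniq -c | awk '{print $2, $1}'   # print "YYYYMM count"
}
```

Run it from the error directory as summarize_error_zips . to print each month prefix followed by the number of archives that carry it.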

You can use these instructions to bulk unzip and reprocess files from the error directory in a Linux environment.

- Connect to the CLI and change to the directory where the error files reside. Your path might be different from the example below. The error directory resides under the CDR File Directory; in the example below, the CDR File Directory is /opt/variphy/data/cluster1.

cd /opt/variphy/data/cluster1/error

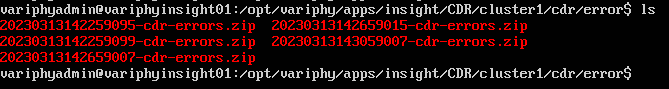

- Display the contents of the error directory using the ls command.
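A plain ls can get long when many archives have accumulated. As a sketch, the bash function below counts the files in a directory by extension, which is also a quick way to confirm the mix of .zip, .dat, and .json files you expect after unzipping. The name count_by_ext is hypothetical and not part of Variphy.

```shell
# Hypothetical helper: count the regular files in a directory by extension.
count_by_ext() {
  local dir="${1:-.}"
  local f
  for f in "$dir"/*.*; do
    [ -f "$f" ] || continue        # skip directories and an unmatched glob
    echo "${f##*.}"                # text after the last dot, e.g. "zip"
  done | sort | uniq -c | awk '{print $2, $1}'   # print "extension count"
}
```

Running count_by_ext . in the error directory prints one line per extension, for example a zip line with the number of archives.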

- This step is optional. To check for CDR (.dat) files left over from a previous unzip, run the following command:

find . -type f -name "*.dat"

- If old CDR files are found, you can clean them up (delete them) with this command:

rm *.dat
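Note that find . also searches subdirectories, while rm *.dat only deletes files in the current directory. The sketch below keeps both the listing and the deletion to the top level of the error directory and prints each file name as it is removed, so you have a record of what was deleted. It relies on the -maxdepth and -delete options of GNU find, which is standard on most Linux distributions; clean_leftover_dat is a hypothetical name.

```shell
# Hypothetical helper: print and delete leftover .dat files in one directory.
# -maxdepth 1 restricts it to that directory, matching the scope of "rm *.dat".
# Relies on GNU find's -maxdepth and -delete options.
clean_leftover_dat() {
  local dir="${1:-.}"
  find "$dir" -maxdepth 1 -type f -name '*.dat' -print -delete
}
```

Run clean_leftover_dat . from the error directory; files in subdirectories are left untouched.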

- Run the command to unzip the files. The syntax of the command will depend on your situation. A few examples are below.

- If you want to unzip all of the files, it will look like this:

unzip "*.zip"

- If you want to unzip all of the files from 2023, it will look like this:

unzip "2023*.zip"

- If you want to unzip all of the files from March 2023, it will look like this:

unzip "202303*.zip"
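The quotation marks in the patterns above matter. They stop the shell from expanding the wildcard, so unzip expands it itself and extracts every matching archive. An unquoted unzip *.zip instead treats the second and later archive names as members to extract from the first archive. The sketch below builds the quoted pattern from a period and rejects malformed input. error_zip_pattern is a hypothetical helper, and its YYYYMMDD form assumes the day follows the month in the file name.

```shell
# Hypothetical helper: turn a period (YYYY, YYYYMM or, assuming the
# naming allows it, YYYYMMDD) into an unzip pattern. No argument means all zips.
error_zip_pattern() {
  local period="${1-}"
  case "$period" in
    '') echo '*.zip' ;;
    [0-9][0-9][0-9][0-9]|[0-9][0-9][0-9][0-9][0-9][0-9]|[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9])
      echo "${period}*.zip" ;;
    *) echo "expected YYYY, YYYYMM or YYYYMMDD, got: $period" >&2
       return 1 ;;
  esac
}
```

Keep the command substitution in double quotes so the pattern still reaches unzip unexpanded. The -n option tells unzip never to overwrite existing files rather than prompting when a .dat file with the same name is already present:

unzip -n "$(error_zip_pattern 202303)"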

- Rerun the ls command to review the contents of the error directory. You should see a mix of .zip, .dat, and .json files. The .json files are not needed; you can delete them with the following command:

rm *.json

- Move the .dat files to the CDR File Directory you identified in step 1 using the mv command. Because the CDR File Directory is one level up from the error directory, you can use .. instead of the full path. This assumes you are still in the error directory, which you should be if you followed these instructions.

mv *.dat ..

This is the same as

mv *.dat /opt/variphy/data/cluster1
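Because mv *.dat .. depends on being in the right directory, a small guard can help. The bash sketch below refuses to run unless the target directory is actually named error, then moves its .dat files one level up and reports how many it moved. move_dat_up is a hypothetical name, and the name check is an assumption based on the directory layout described in step 1.

```shell
# Hypothetical helper: move .dat files from an "error" directory to its parent
# (the CDR File Directory in this article's layout) and report the count.
move_dat_up() {
  local dir="${1:-.}"
  # Guard: only proceed if the directory's own name is "error".
  if [ "$(basename "$(cd "$dir" && pwd)")" != "error" ]; then
    echo "not an error directory: $dir" >&2
    return 1
  fi
  local n=0 f
  for f in "$dir"/*.dat; do
    [ -e "$f" ] || continue        # no .dat files: skip the literal glob
    mv -- "$f" "$dir/.." && n=$((n + 1))
  done
  echo "moved $n .dat file(s)"
}
```

From the error directory, move_dat_up . does the same as mv *.dat .. but stops with an error if you have changed to a different directory.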

The next time Variphy collects data, it will pick up those .dat files and insert their data into the database. You can monitor progress in the cdr-processing.log file.